Upload a Python Package to a Lambda Layer

Today we will learn how to build a Python package and upload it to a Lambda layer.

First, we will create a Lambda function in Python that builds the package and uploads it to an S3 bucket.

Step 1: Open your AWS account.

Step 2: Open IAM service console.

Step 3: Create an IAM policy for the IAM role.

- Copy the policy below and paste it into your IAM policy.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "logs:*",
            "Resource": "arn:aws:logs:*:*:log-group:*:log-stream:*"
        },
        {
            "Sid": "VisualEditor1",
            "Effect": "Allow",
            "Action": [
                "s3:*",
                "logs:*"
            ],
            "Resource": [
                "arn:aws:s3:::*",
                "arn:aws:logs:*:*:log-group:*"
            ]
        }
    ]
}
```

Note: To learn how to create a custom IAM policy, please follow this link.
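The policy above applies to every bucket (`arn:aws:s3:::*`). If you prefer a narrower policy, the following is a minimal sketch of one, assuming the function only needs to write objects into a single bucket and write its own logs. The function name `build_layer_builder_policy` and the `Sid` values are made up for this example.

```python
import json


def build_layer_builder_policy(bucket_name):
    """Return an IAM policy document limited to one bucket and CloudWatch Logs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "WriteLogs",
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
            {
                "Sid": "UploadLayerZip",
                "Effect": "Allow",
                "Action": "s3:PutObject",
                # Object-level ARN: the bucket name followed by /*
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Print the JSON so it can be pasted into the IAM policy editor.
    policy = build_layer_builder_policy("lambda-python-libs-packages")
    print(json.dumps(policy, indent=2))
```

Run the script locally and paste the printed JSON into the policy editor in place of the broader policy.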

Step 4: Create an IAM role and attach the above IAM policy.

Note: To learn how to create an IAM role, please follow this link.
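If you would rather script this step than use the console, here is a rough sketch with boto3. It assumes the policy from Step 3 already exists as a managed policy and that you know its ARN. The function name `create_lambda_role` and its parameters are placeholders for this example. The role needs a trust policy that lets the Lambda service assume it, which the console adds for you when you pick Lambda as the trusted service.

```python
import json

# Trust policy that allows the Lambda service to assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_lambda_role(role_name, policy_arn):
    """Create the role and attach an existing managed policy to it.

    Needs AWS credentials with IAM permissions; returns the new role's ARN.
    """
    import boto3  # imported here so the rest of the file loads without boto3

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return role["Role"]["Arn"]
```

Calling `create_lambda_role` with your role name and the policy ARN should give you a role you can select in Step 6.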

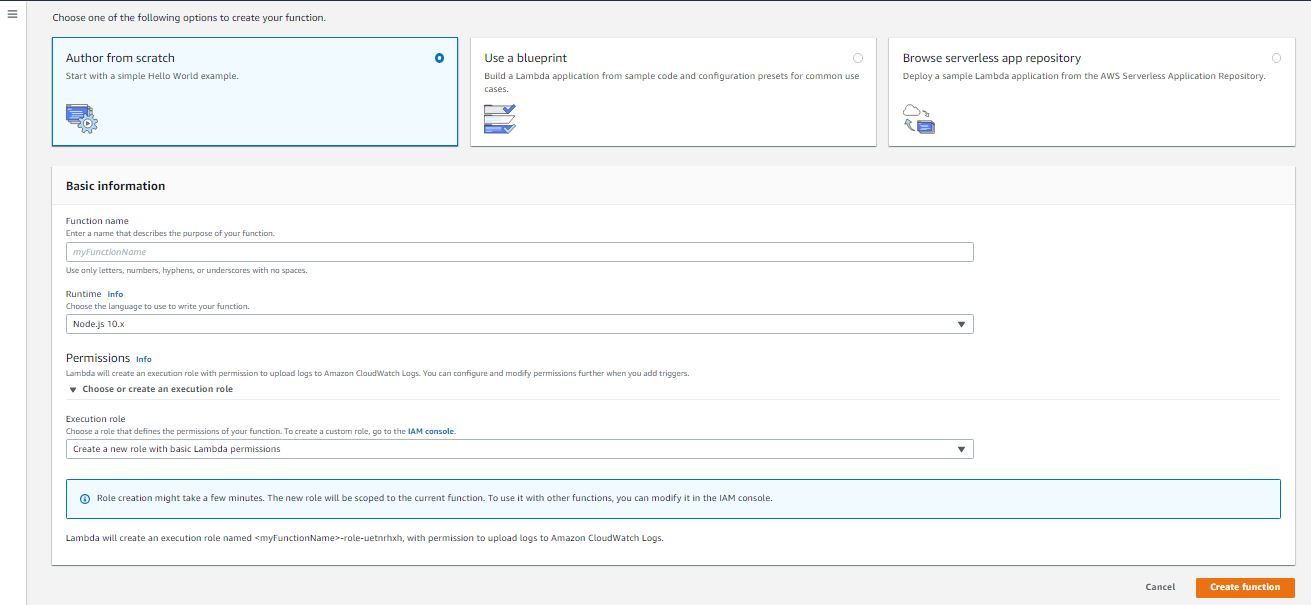

Step 5: Open Lambda console.

Step 6: Create Lambda Function.

To create a Lambda function, go to the Lambda service in the AWS console and create a new function.

- Add a Lambda function name. (You can use any name.)

- In Runtime info, choose "Python 3.9".

- In Permissions, choose "Use an existing role" under Execution role.

- In Existing role, choose the IAM role that you created above for this Lambda function.

- Click on "Create function".

- Go to "Function code" and paste the Python code below into it.

```python
import json
import os
import shutil
import subprocess

import boto3


def lambda_handler(event, context):
    # ========== Parameters ==========
    pip_package = "dnspython"
    package_version = "2.2.1"
    s3_bucket = "lambda-python-libs-packages"
    # ================================

    # Layers expect packages under python/lib/python3.9/site-packages/.
    build_root = "/tmp/python"
    site_packages = f"{build_root}/python/lib/python3.9/site-packages/"
    # /tmp can survive between invocations, so clear leftovers first.
    shutil.rmtree(build_root, ignore_errors=True)
    os.makedirs(site_packages)

    # Pip install the pinned package version into the layer directory.
    result = subprocess.run(
        ["pip3", "install", f"{pip_package}=={package_version}",
         "-t", site_packages, "--no-cache-dir"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        print("pip install failed:", result.stderr)
        return {"statusCode": 500, "body": json.dumps("pip install failed")}

    # Zip the build directory into /tmp/python.zip.
    shutil.make_archive(build_root, "zip", build_root)

    # Put the zip in your S3 bucket.
    s3 = boto3.client("s3")
    key = f"{pip_package}/{package_version}/python.zip"
    try:
        s3.upload_file("/tmp/python.zip", s3_bucket, key)
    except Exception as exception:
        print("Upload failed:", exception)
        return {"statusCode": 500, "body": json.dumps("Upload failed")}

    return {"statusCode": 200, "body": json.dumps("Success!")}
```

Note: Don't forget to change the parameters: the S3 bucket name, the package name, and the package version.
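The directory layout matters because Lambda extracts a layer under `/opt` and looks for Python packages in `python/` inside it, so the zip must have `python/` at its top level. The sketch below reproduces the same `shutil.make_archive` call on a temporary directory and checks the result locally. The helper names and the `example_module.py` file are invented for the illustration.

```python
import os
import shutil
import tempfile
import zipfile


def build_layer_zip(build_root, archive_base):
    """Zip build_root so that its children sit at the top of the archive."""
    return shutil.make_archive(archive_base, "zip", build_root)


def top_level_entries(zip_path):
    """Return the set of first path components found in the archive."""
    with zipfile.ZipFile(zip_path) as archive:
        return {name.split("/")[0] for name in archive.namelist()}


def demo():
    """Build a tiny fake layer and report what sits at the top of the zip."""
    with tempfile.TemporaryDirectory() as work_dir:
        build_root = os.path.join(work_dir, "build")
        site_packages = os.path.join(
            build_root, "python", "lib", "python3.9", "site-packages"
        )
        os.makedirs(site_packages)
        # Stand-in for a pip-installed module (hypothetical file name).
        with open(os.path.join(site_packages, "example_module.py"), "w") as handle:
            handle.write("VALUE = 1\n")
        zip_path = build_layer_zip(build_root, os.path.join(work_dir, "layer"))
        return top_level_entries(zip_path)


if __name__ == "__main__":
    print(demo())
```

If anything other than `python` shows up at the top level, the layer will attach without error but the import will most likely fail at runtime.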

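Step 7: Save the Lambda function and run the code to upload the package to S3. The default timeout of 3 seconds is usually too short for a pip install, so increase it under Configuration > General configuration if the run times out.

Now we are ready to upload the package to a Lambda layer.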
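Instead of the Test button in the console, the function can also be run from a script. This is a sketch only: `run_builder` and `parse_handler_result` are names chosen for this example, and it assumes the handler returns a `statusCode` and a JSON-encoded `body` as the code above does.

```python
import json


def parse_handler_result(payload_bytes):
    """Decode the handler's return value into (succeeded, body)."""
    result = json.loads(payload_bytes)
    body = json.loads(result["body"])
    return result["statusCode"] == 200, body


def run_builder(function_name):
    """Invoke the function synchronously. Needs AWS credentials."""
    import boto3  # imported here so the rest of the file loads without boto3

    client = boto3.client("lambda")
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=b"{}",
    )
    # FunctionError is only present when the handler raised or timed out.
    if response.get("FunctionError"):
        raise RuntimeError(response["Payload"].read().decode())
    return parse_handler_result(response["Payload"].read())
```

`run_builder("your-function-name")` should return `(True, "Success!")` once the zip has reached the bucket.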

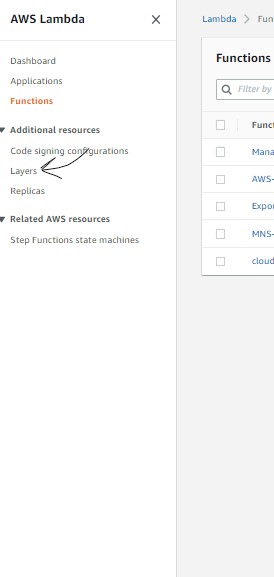

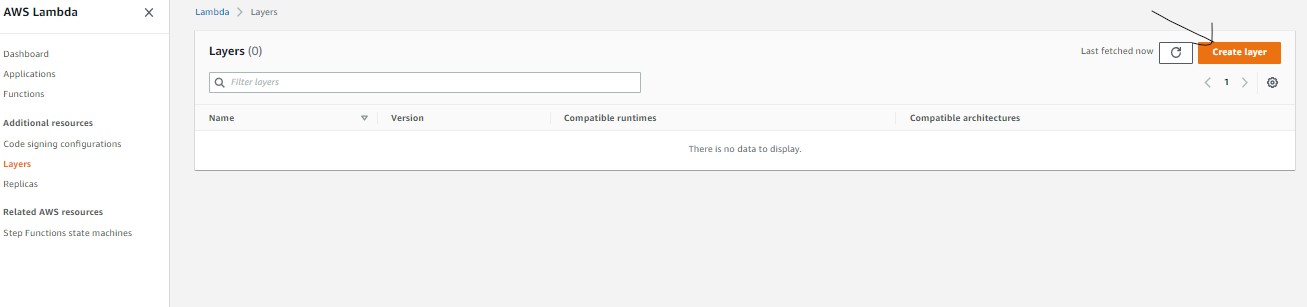

Step 1: Click on Layers in the Lambda console side menu.

Step 2: Click on the Create layer button.

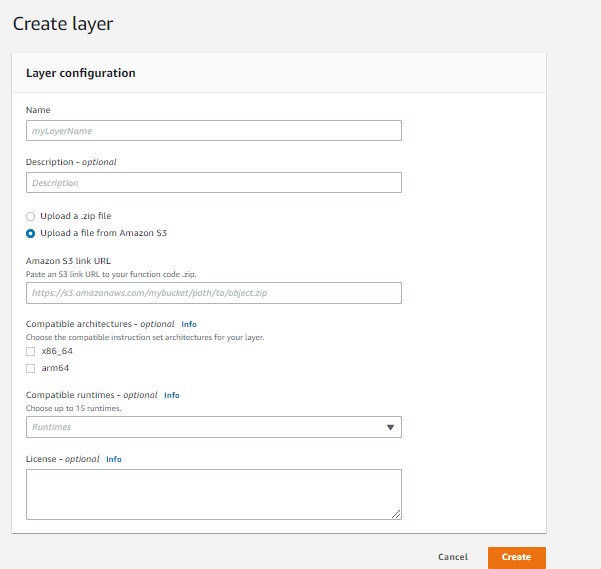

Step 3: Enter the details and create the layer.

Name: Enter the lib/package name.

Description: Enter some details about your lib/package.

Amazon S3 link URL: Enter the S3 URL of the zip file created by the code above.

Compatible architectures: Select the architecture on which you built the lib. (Check the architecture of the Lambda function above.)

Compatible runtimes: Select the Python version for which you built the lib.

License: Leave as it is.

Step 4: Click on the Create button. Your lib/package is now uploaded.
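The same layer can be published from a script with boto3's `publish_layer_version`. The sketch below is one possible way to do it, assuming the zip sits under the `<package>/<version>/python.zip` key used by the function above. The helper names and the default runtime and architecture values are assumptions, so adjust them to match your function.

```python
def layer_request(package, version, bucket,
                  runtime="python3.9", architecture="x86_64"):
    """Build the arguments for publish_layer_version from the form fields."""
    return {
        "LayerName": package,
        "Description": f"{package} {version}",
        "Content": {
            "S3Bucket": bucket,
            "S3Key": f"{package}/{version}/python.zip",
        },
        "CompatibleRuntimes": [runtime],
        "CompatibleArchitectures": [architecture],
    }


def publish_layer(request):
    """Publish the layer and return its version ARN. Needs AWS credentials."""
    import boto3  # imported here so the rest of the file loads without boto3

    client = boto3.client("lambda")
    response = client.publish_layer_version(**request)
    return response["LayerVersionArn"]
```

For example, `publish_layer(layer_request("dnspython", "<version>", "lambda-python-libs-packages"))` would publish the package built earlier, with `<version>` replaced by the version you uploaded.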